Understanding the Indexing Challenge

Have you ever poured hours into creating the perfect piece of content, only to discover it’s not showing up in search results? You’re not alone. While many SEO professionals obsess over rankings and keywords, they often overlook a fundamental prerequisite: getting their content indexed in the first place.

Indexing is the process by which search engines discover, crawl, and add your web pages to their searchable database. Without indexing, your content simply doesn’t exist as far as search engines are concerned. It’s like having the most beautiful store in the world, but the doors are locked and no one can come inside.

For large websites with thousands or even millions of pages, ensuring complete indexing presents a significant challenge. Search engines have limited resources and must make decisions about which pages to index and which to ignore. This is particularly true for Google, which has become increasingly selective about what content it chooses to include in its index.

The sobering reality? Based on extensive data from enterprise-level websites, an average of 9% of valuable deep content pages — products, articles, listings, and other important pages — fail to get indexed by major search engines like Google and Bing. That’s potentially thousands of pages of content that remain invisible to searchers.

Why Indexing Matters More Than You Think

In the current digital landscape, indexing matters more than ever. Here’s why:

The Foundation of All Search Visibility: Before your content can rank, it must be indexed. No indexing means no chance to appear in search results, regardless of your content’s quality or relevance.

Beyond Traditional Search: Indexing is becoming crucial not just for traditional search engine results pages (SERPs) but also for:

- AI-generated search results

- Google Discover feeds

- Shopping results

- News carousels

- Voice search responses

- AI assistants like Gemini, Claude, and ChatGPT

Retrieval-Augmented Generation (RAG): As AI tools increasingly rely on RAG systems to generate responses, being part of their indexed knowledge base becomes essential for visibility in AI-generated content.

Competitive Edge: If your content is indexed but your competitor’s isn’t, you gain an automatic advantage in search visibility, regardless of other ranking factors.

Revenue Impact: For e-commerce sites, each unindexed product page represents potential lost sales. For publishers, unindexed articles mean missed ad revenue and audience opportunities.

In essence, indexing isn’t just a technical SEO concern—it’s a fundamental business issue that directly impacts your digital visibility, traffic potential, and ultimately, your bottom line.

The “Discovered – Currently Not Indexed” Problem

One of the most frustrating indexing status messages in Google Search Console is “Discovered – currently not indexed.” This cryptic message indicates that Google has found your URL but has chosen not to add it to its index—at least not yet.

This status can persist for weeks or even months, leaving website owners wondering what they’re doing wrong and how they can fix it. Understanding why Google makes these decisions is key to addressing the problem.

Several factors can contribute to the “Discovered – currently not indexed” status:

Crawl Budget Limitations: Google allocates a specific “budget” of resources to crawl each website. If your site has too many low-value URLs consuming this budget, important pages might not get crawled or indexed.

Content Quality Assessment: Google evaluates whether a page adds unique value to its index. Content that appears thin, duplicative, or low-quality may be discovered but not indexed.

Site Architecture Issues: Poor internal linking, excessive pagination, or deeply nested pages can signal to Google that content isn’t important enough to index.

Technical Barriers: Slow page load times, server errors, or rendering problems can prevent proper indexing even when pages are discovered.

Content Freshness: Older content that hasn’t been updated may be deprioritized for indexing compared to newer pages.

The good news is that with the right strategy, you can overcome these challenges and significantly improve your indexing rate. Let’s dive into the nine proven steps that will help you resolve indexing issues and maximize your site’s visibility.

Step 1: Audit Your Content for Indexing Issues

Before you can fix indexing problems, you need to understand exactly what’s happening with your content. A comprehensive indexing audit is the crucial first step.

Setting Up Proper Sitemaps

The most effective approach is to segment your sitemaps by content type. Instead of having one massive sitemap for your entire website, create separate sitemaps for:

- Product pages

- Blog articles and guides

- Category/collection pages

- Videos

- News content

- Any other distinct content types on your site

This segmentation allows you to monitor indexing performance by content type, making it easier to identify patterns and issues specific to certain sections of your site.

Once you’ve created these segmented sitemaps, submit them in both Google Search Console and Bing Webmaster Tools. After a few days, you’ll be able to see detailed reports showing how many pages from each sitemap have been indexed and which ones are experiencing problems.
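As a rough illustration of the segmentation idea, the sketch below groups URLs by content type and builds one sitemap per group. The path prefixes in `CONTENT_TYPES` and the function names are hypothetical; adjust them to your own URL structure.

```python
from collections import defaultdict
from urllib.parse import urlparse
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

# Hypothetical path prefixes; replace with the ones your site actually uses.
CONTENT_TYPES = {
    "/products/": "products",
    "/blog/": "articles",
    "/category/": "categories",
}


def group_by_content_type(urls):
    """Bucket URLs by the first matching path prefix; the rest go to 'other'."""
    groups = defaultdict(list)
    for url in urls:
        path = urlparse(url).path
        name = next(
            (label for prefix, label in CONTENT_TYPES.items() if path.startswith(prefix)),
            "other",
        )
        groups[name].append(url)
    return dict(groups)


def build_sitemap(urls):
    """Return a minimal sitemap XML string containing one <url> per input URL."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")
```

Each group can then be written to its own file (for example, one sitemap for products and one for articles) and submitted separately, so the reports line up with your content types.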

Analyzing Indexing Exclusions

When reviewing the data in Google Search Console’s “Pages” report, pay special attention to the list of reasons pages are not indexed (labeled “Excluded” in older versions of the report). This section will reveal why certain pages aren’t being indexed. Indexing issues typically fall into three main categories:

1. Poor SEO Directives

These are technical issues that you’ve inadvertently created through incorrect SEO implementations:

- Pages blocked by robots.txt: Your robots.txt file is instructing search engines not to crawl these pages.

- Incorrect canonical tags: You may have specified that another URL is the preferred version of this page.

- Noindex directives: Either through meta tags or HTTP headers, you’re explicitly telling search engines not to index these pages.

- 404 errors: Pages that return “not found” errors can’t be indexed.

- 301 redirects: Pages that redirect to other URLs won’t be indexed as distinct pages.

The solution for these issues is straightforward: remove these pages from your sitemaps or fix the technical directives that are preventing indexing.

2. Low Content Quality

If Google Search Console shows “Soft 404” or other content quality issues, your pages may not contain enough substantial content to warrant indexing. To address this:

- Ensure all SEO-relevant content is rendered server-side rather than relying on JavaScript to load important content.

- Improve the depth and uniqueness of the content on these pages.

- Add more valuable information that differentiates these pages from others on your site.

- Enhance the overall user experience and readability of the content.

3. Processing Issues

The most frustrating category includes status messages like “Discovered – currently not indexed” or “Crawled – currently not indexed.” These indicate that Google has found your content but has chosen not to add it to the index yet.

Processing issues require a more comprehensive approach, which we’ll cover in the subsequent steps of this guide.

Creating Indexing Benchmarks

Once you’ve conducted your initial audit, establish benchmarks for each content type:

- What percentage of product pages are currently indexed?

- What’s the indexing rate for your articles or blog posts?

- How quickly do new pages typically get indexed?

These benchmarks will help you measure progress as you implement the remaining steps in this guide. They also provide valuable context for setting realistic goals and expectations for your indexing improvement efforts.
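A benchmark can be as simple as the share of submitted URLs that are indexed, per content type. The sketch below shows that arithmetic; the counts are made-up placeholders standing in for the numbers you would read from each sitemap's report.

```python
def indexing_rate(submitted, indexed):
    """Return the percentage of submitted URLs that are indexed."""
    if submitted == 0:
        return 0.0
    return round(100 * indexed / submitted, 1)


# Hypothetical counts, one entry per segmented sitemap.
counts = {
    "products": {"submitted": 12000, "indexed": 10440},
    "articles": {"submitted": 800, "indexed": 776},
}

benchmarks = {
    name: indexing_rate(c["submitted"], c["indexed"]) for name, c in counts.items()
}
```

Recording these figures on a schedule gives you a baseline to compare against after each change.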

Step 2: Submit a News Sitemap for Faster Article Indexing

If your website publishes articles, guides, or any form of editorial content, a News sitemap can significantly accelerate indexing—even if your content isn’t traditional “news.”

The Power of News Sitemaps

News sitemaps are specifically designed to help Google discover and index time-sensitive content more quickly. While they were originally created for news publishers, they can benefit any site that regularly publishes fresh content.

A properly formatted News sitemap includes specialized tags that provide additional context about your content:

- <news:publication> – Information about the publishing organization

- <news:publication_date> – When the article was published

- <news:title> – The headline of the article

- <news:keywords> – Relevant keywords for the article

These additional signals help Google understand the content’s timeliness and relevance, potentially prioritizing it for faster indexing.

Creating an Effective News Sitemap

To create a News sitemap, follow these guidelines:

- Include only content published within the last 48 hours (Google specifically looks for recent content in News sitemaps)

- Use the proper XML namespace for News sitemaps

- Include required news-specific tags for each URL

- Keep the sitemap updated as new content is published

Here’s a simple example of how a News sitemap entry should be structured:

<?xml version="1.0" encoding="UTF-8"?>

<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"

xmlns:news="http://www.google.com/schemas/sitemap-news/0.9">

<url>

<loc>https://www.example.com/articles/seo-indexing-guide</loc>

<news:news>

<news:publication>

<news:name>Example Publication</news:name>

<news:language>en</news:language>

</news:publication>

<news:publication_date>2023-05-12T13:00:00Z</news:publication_date>

<news:title>Complete Guide to SEO Indexing</news:title>

</news:news>

</url>

</urlset>

Automating News Sitemap Updates

Since News sitemaps focus on recent content, manual updates are impractical. Set up an automated system that:

- Identifies newly published content

- Adds it to the News sitemap

- Removes content older than 48 hours

- Automatically resubmits the updated sitemap

This ensures that Google always has access to your freshest content through the News sitemap, maximizing the chances of rapid indexing.
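The generation step of such a system might look like the sketch below, which rebuilds the News sitemap from scratch and drops anything older than 48 hours. The shape of the `articles` input (dicts with `url`, `title`, and a timezone-aware `published` datetime) is an assumption for illustration, not something your CMS necessarily provides in that form.

```python
from datetime import datetime, timedelta, timezone
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
NEWS_NS = "http://www.google.com/schemas/sitemap-news/0.9"
MAX_AGE = timedelta(hours=48)


def build_news_sitemap(articles, publication_name, language, now=None):
    """Build News sitemap XML for articles published within the last 48 hours.

    `articles` is an iterable of dicts with 'url', 'title', and a
    timezone-aware 'published' datetime (a hypothetical shape for this sketch).
    """
    now = now or datetime.now(timezone.utc)
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS, "xmlns:news": NEWS_NS})
    for article in articles:
        if now - article["published"] > MAX_AGE:
            continue  # too old for the News sitemap
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = article["url"]
        news = ET.SubElement(url, "news:news")
        publication = ET.SubElement(news, "news:publication")
        ET.SubElement(publication, "news:name").text = publication_name
        ET.SubElement(publication, "news:language").text = language
        ET.SubElement(news, "news:publication_date").text = article["published"].isoformat()
        ET.SubElement(news, "news:title").text = article["title"]
    return ET.tostring(urlset, encoding="unicode")
```

Running this on every publish event (or on a short timer) keeps the file current without manual edits; how you then serve or resubmit the file depends on your stack.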

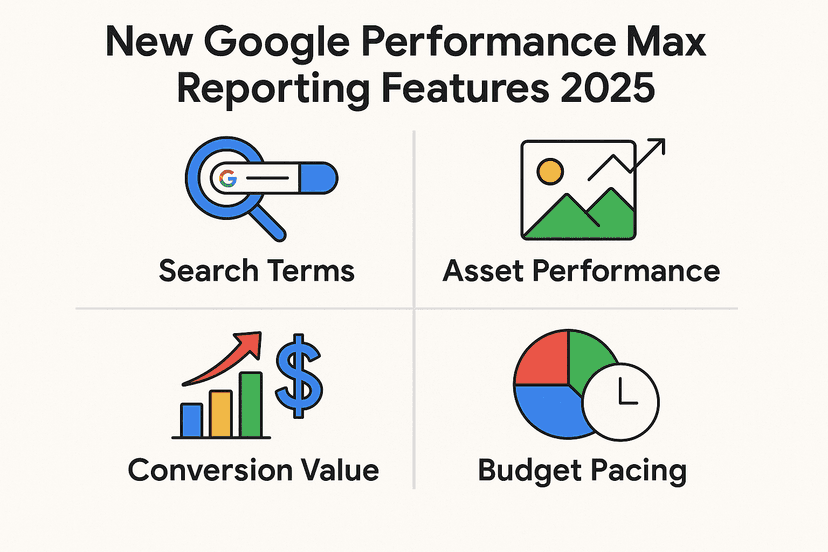

Step 3: Use Google Merchant Center Feeds to Improve Product Indexing

For e-commerce websites, Google Merchant Center offers a powerful alternative pathway to get your product pages indexed. While primarily designed for Shopping ads, submitting your product catalog to Merchant Center can significantly improve organic indexing as well.

The Merchant Center Advantage

Google’s Merchant Center creates a direct data feed between your product catalog and Google’s systems. This provides several indexing benefits:

- Google receives structured data about your products in a format it explicitly requests

- Product information is verified and validated against Google’s quality guidelines

- Updates to product information can be processed more efficiently than waiting for regular crawls

- The connection between paid and organic systems at Google creates cross-platform signals about your content’s validity

Setting Up an Effective Product Feed

To leverage Merchant Center for improved indexing:

- Create a comprehensive product feed that includes all active products (not just ones you plan to advertise)

- Ensure the feed includes high-quality data for all required attributes:

  - Accurate product titles

  - Detailed descriptions

  - Current pricing

  - Stock availability

  - GTIN/MPN when applicable

  - Clear categorization

  - High-quality image URLs

- Set up regular feed updates: daily at minimum, but ideally more frequently for sites with changing inventory or pricing

- Address any feed errors or warnings promptly

Beyond Basic Feed Requirements

To maximize the indexing benefits, go beyond the minimum requirements:

- Include optional attributes like product dimensions, materials, and other relevant specifications

- Add multiple high-quality images when available

- Implement proper variant grouping for products with multiple colors, sizes, etc.

- Ensure landing page URLs in your feed match the canonical URLs you want indexed

Connecting Merchant Center and Search Console

For optimal results, link your Google Merchant Center and Search Console accounts. This connection helps Google understand the relationship between your product feeds and your website, potentially improving indexing coverage for your product pages.

While Merchant Center is specific to Google and certain product categories, it represents one of the most direct ways to communicate product information to Google’s systems and can significantly impact how quickly and completely your product pages get indexed.

Step 4: Leverage RSS Feeds for Faster Content Discovery

While XML sitemaps have become the standard for communicating with search engines about your content, RSS feeds offer unique advantages for accelerating content discovery and indexing.

The RSS Advantage

RSS (Really Simple Syndication) feeds were designed specifically for content distribution and have several characteristics that make them valuable for indexing:

- Chronological Ordering: RSS feeds naturally present content in reverse chronological order, highlighting your newest content

- Lightweight Format: The simple structure makes RSS feeds easy and quick for search engines to process

- Push Notification Support: RSS still supports WebSub (formerly PubSubHubbub), allowing for instant notifications when new content is published

- Focused Content: RSS feeds typically include only the most important content, reducing noise for crawlers

Creating an Effective RSS Feed for Indexing

To leverage RSS feeds for improved indexing:

- Create a dedicated RSS feed that includes only content published in the last 48 hours

- Include full content in the feed, not just excerpts (this gives search engines immediate access to your content)

- Ensure the feed is valid RSS 2.0 format

- Submit the feed URL in both Google Search Console and Bing Webmaster Tools’ Sitemaps sections

- Implement WebSub to notify hubs when new content is published

Here’s a sample structure for an effective RSS feed entry:

<item>

<title>Complete Guide to SEO Indexing</title>

<link>https://www.example.com/articles/seo-indexing-guide</link>

<pubDate>Fri, 12 May 2023 13:00:00 GMT</pubDate>

<description><![CDATA[Full article content goes here...]]></description>

<guid isPermaLink="true">https://www.example.com/articles/seo-indexing-guide</guid>

</item>

Implementing WebSub for Instant Notifications

WebSub is particularly valuable for indexing because it provides an instant push notification when new content is published, rather than waiting for search engines to discover it through regular crawling.

To implement WebSub:

- Include the hub declaration in your RSS feed header (the atom prefix requires xmlns:atom="http://www.w3.org/2005/Atom" on the <rss> element):

<atom:link rel="hub" href="https://pubsubhubbub.appspot.com/" />

- Set up your content management system to ping the hub whenever new content is published

- Ensure your feed is publicly accessible and not behind authentication

While WebSub is no longer supported for XML sitemaps, it continues to function for RSS feeds, giving you a powerful tool for accelerating content discovery.
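The "ping the hub" step can be sketched as a small form-encoded POST. The WebSub specification leaves the publisher-to-hub call up to each hub; the `hub.mode=publish` and `hub.url` parameters below follow the convention used by public hubs such as the one referenced above, so treat them as an assumption if you use a different hub. The function names are illustrative.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen

HUB_URL = "https://pubsubhubbub.appspot.com/"


def build_publish_ping(feed_url, hub_url=HUB_URL):
    """Build the POST request that tells the hub a feed has new content."""
    body = urlencode({"hub.mode": "publish", "hub.url": feed_url}).encode("ascii")
    return Request(
        hub_url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def ping_hub(feed_url):
    """Send the ping and return the HTTP status code (performs a network call)."""
    with urlopen(build_publish_ping(feed_url), timeout=10) as response:
        return response.status
```

Calling `ping_hub("https://www.example.com/feed.xml")` from your publish hook would notify the hub each time the feed changes.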

Step 5: Utilize Indexing APIs for Immediate Notification

For the most immediate indexing signals, direct API integrations with search engines provide the fastest path to discovery. Both Microsoft and Google offer APIs that allow you to directly notify their systems about new or updated content.

IndexNow: The Universal Indexing Protocol

IndexNow is an open protocol developed by Microsoft and supported by multiple search engines including Bing and Yandex. It allows websites to instantly notify search engines about content changes, with these key advantages:

- High Submission Limits: The protocol sets no daily quota, although a single request can carry at most 10,000 URLs and search engines may throttle excessive submissions

- Simple Implementation: The API requires minimal technical overhead

- Broad Support: Multiple search engines support the same protocol

- Immediate Processing: URLs are typically processed within seconds or minutes

To implement IndexNow:

- Generate a verification key and place it at the root of your domain

- Submit URLs when content is created, updated, or deleted using a simple HTTP request

- Integrate the API calls into your content management workflow for automatic notification
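A bulk IndexNow submission is a single JSON POST. The sketch below builds that request against the shared `api.indexnow.org` endpoint; it assumes your key file is named `<key>.txt` at the site root and that all URLs belong to the same host. The function names are illustrative.

```python
import json
from urllib.parse import urlparse
from urllib.request import Request, urlopen

INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(urls, key):
    """Build a bulk IndexNow submission for URLs that share one host."""
    host = urlparse(urls[0]).netloc
    payload = {
        "host": host,
        "key": key,
        # Assumes the key file lives at the root of the domain as <key>.txt.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    return Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


def submit(urls, key):
    """Send the submission and return the HTTP status code (network call)."""
    with urlopen(build_indexnow_request(urls, key), timeout=10) as response:
        return response.status
```

Hooking `submit` into the create, update, and delete events of your CMS covers the three cases listed above.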

Google Indexing API: Limited But Powerful

Google also offers an Indexing API, though with more restrictions:

- Official Limitation: Google states the API is only for pages with JobPosting structured data or BroadcastEvent structured data embedded in a VideoObject

- Daily Quota: Standard accounts are limited to 200 API calls per day

- Authentication Required: You’ll need to set up Google Cloud credentials

Despite these limitations, many SEO professionals have found the Google Indexing API to be effective even for content beyond its stated scope. If you’re willing to test boundaries, consider implementing it for your most critical pages regardless of content type.

To implement the Google Indexing API:

- Set up a Google Cloud project and enable the Indexing API

- Generate API credentials

- Implement the API calls in your content workflow

- Monitor quota usage and results
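The API call itself is a small authenticated POST. The sketch below builds the request and trims a priority list to the remaining daily quota; it assumes you already hold an OAuth 2.0 access token for a service account with the indexing scope (obtaining that token is outside this sketch), and the helper names are illustrative.

```python
import json
from urllib.request import Request

PUBLISH_ENDPOINT = "https://indexing.googleapis.com/v3/urlNotifications:publish"
DAILY_QUOTA = 200  # default quota mentioned above


def build_publish_request(url, access_token, deleted=False):
    """Build a notification that a URL was updated (or removed)."""
    body = {"url": url, "type": "URL_DELETED" if deleted else "URL_UPDATED"}
    return Request(
        PUBLISH_ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",
        },
        method="POST",
    )


def select_within_quota(urls_by_priority, already_sent_today, quota=DAILY_QUOTA):
    """Keep only as many top-priority URLs as the remaining quota allows."""
    remaining = max(quota - already_sent_today, 0)
    return urls_by_priority[:remaining]
```

Sorting your candidate URLs by business value before calling `select_within_quota` keeps the limited calls focused on the pages that matter most.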

Strategic API Implementation

Since these APIs have different characteristics, implement them strategically:

- Use IndexNow for all content updates across your site

- Reserve Google Indexing API calls for your highest-priority pages

- Track which URLs you’ve submitted through each API

- Monitor indexing results to refine your submission strategy

By combining both APIs, you create multiple direct channels to notify search engines about your most important content changes, significantly accelerating the indexing process.

Step 6: Strengthen Internal Linking to Boost Indexing Signals

While external APIs and sitemaps provide explicit signals to search engines, internal linking remains one of the most powerful tools for improving indexing. Search engines primarily discover content through links, and the strength of your internal linking structure directly impacts which pages get prioritized for crawling and indexing.

The Link Equity Principle

Search engines assign different levels of importance to pages based on how they’re linked within your site. Pages with stronger internal linking signals are:

- Crawled more frequently

- Prioritized higher in the crawl queue

- More likely to be indexed

- Indexed more quickly when published or updated

For large sites with thousands of deep content pages, strategic internal linking becomes crucial for ensuring complete indexing.

Critical Internal Linking Elements

Focus on optimizing these key internal linking components:

Homepage Links

Your homepage typically has the highest authority on your site and passes the strongest signals to linked pages. Since you can’t link to every page from your homepage, create a strategic system:

- When a new URL is published, check it against your server log files

- Once Googlebot has crawled the URL for the first time, query the Google Search Console API

- If the response indicates the URL is not yet indexed (“URL is unknown to Google,” “Crawled – currently not indexed,” or “Discovered – currently not indexed”), add it to a special feed

- Use this feed to populate a dedicated section on your homepage for non-indexed content

- Continue checking the indexing status periodically and remove pages once they become indexed

This creates a dynamic, self-updating system that leverages your homepage’s authority to accelerate indexing for pages that need it most.
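The feed-maintenance logic in these steps can be sketched as a pure function. It assumes you have already collected two inputs elsewhere: the set of URLs Googlebot has requested (from your logs) and a mapping of URL to the coverage status returned by the Search Console API. The substring checks on the status text are a simplification chosen for this sketch, since exact status wording may vary.

```python
def is_not_indexed(coverage_state):
    """Return True for the 'not indexed' statuses described above."""
    state = coverage_state.lower()
    return "not indexed" in state or "unknown to google" in state


def update_homepage_feed(feed, inspections, googlebot_crawled):
    """Return the new set of URLs to show in the homepage section.

    feed: URLs currently in the homepage section
    inspections: dict of URL -> coverage status string (assumed input)
    googlebot_crawled: URLs already requested by Googlebot per server logs
    """
    updated = set(feed)
    for url, state in inspections.items():
        if is_not_indexed(state):
            if url in googlebot_crawled:
                updated.add(url)  # crawled but still not indexed
        else:
            updated.discard(url)  # indexed, so it no longer needs the boost
    return updated
```

Running this on a schedule and rendering the returned set server-side gives you the self-updating section described above.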

Related Content Blocks

The “Related Products,” “You Might Also Like,” or “Related Articles” sections are powerful indexing tools when properly optimized:

- Ensure these blocks are rendered server-side, not via JavaScript

- Include a mix of both popular and less-discovered content

- Update algorithms to occasionally feature newer or less-indexed content

- Use descriptive anchor text that includes relevant keywords

Navigation and Category Structures

Your site’s primary navigation and category structures distribute significant link equity:

- Keep important pages within 3 clicks of the homepage

- Use descriptive, keyword-rich navigation labels

- Implement proper breadcrumb navigation with schema markup

- Create logical category hierarchies that distribute link equity effectively
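To check the three-click guideline, you can compute each page's click depth with a breadth-first search over your internal link graph. The sketch below assumes you have exported that graph as a dict mapping each page path to the paths it links to (for example, from a crawler); the function names are illustrative.

```python
from collections import deque


def click_depths(links, start="/"):
    """Return the minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_deeper_than(links, max_clicks=3, start="/"):
    """List pages that are unreachable or more than `max_clicks` from `start`."""
    depths = click_depths(links, start)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(p for p in all_pages if depths.get(p, float("inf")) > max_clicks)
```

Pages returned by `pages_deeper_than` are candidates for additional links from category pages or related content blocks; orphaned pages show up in the same list because they have no depth at all.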

Pagination Implementation

For sites with paginated content, proper implementation is crucial:

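- Use rel="next" and rel="prev" for sequence signals where they are still supported; Google announced in 2019 that it no longer uses them for indexing, so make sure paginated pages are also connected by ordinary crawlable links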

- Include a “View All” option when practical

- Ensure paginated pages link to individual content pieces

- Implement proper canonical tags to avoid duplicate content issues

Dynamic Internal Linking Strategies

Beyond static linking structures, implement these dynamic strategies:

- Freshness Boosts: Temporarily increase internal links to new content for the first 30 days

- Indexing Recovery: Create special sections linking to valuable pages that have fallen out of the index

- Strategic Interlinking: Ensure every new piece of content links to at least 3-5 other relevant pages

- Authority Distribution: Identify your highest-authority pages through analytics and use them to strategically link to pages needing indexing help

By systematically strengthening your internal linking structure, you create clearer signals about which pages matter most, helping search engines make better decisions about what to index and prioritize.

Step 7: Block Non-SEO Relevant URLs from Crawlers

While getting your important content indexed is crucial, equally important is preventing search engines from wasting resources on irrelevant pages. Every site has URLs that provide no SEO value and can actively harm your indexing efficiency if crawled.

The Crawl Budget Connection

Search engines allocate a limited “crawl budget” to each website—the number of pages they’re willing to crawl within a certain timeframe. If this budget is wasted on low-value pages, your important content may not get discovered or recrawled frequently enough.

Additionally, a high proportion of low-quality pages can negatively impact how search engines perceive your site’s overall quality, potentially affecting indexing decisions.

Identifying Crawl Waste

Conduct regular log file analysis to identify URLs that are being crawled but provide no SEO value, such as:

- Faceted Navigation: Filter combinations that create nearly identical content

- Internal Search Pages: Results pages for site searches

- Pagination Extremes: Very deep pagination pages (page 20+)

- Session IDs and Tracking Parameters: URLs with unnecessary parameters

- User-Specific Content: Account pages, wishlists, shopping carts

- Administrative Functions: Login, registration, account management

- Duplicate Content Variations: Print versions, mobile versions with separate URLs
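A first pass at this kind of log analysis can be a short script that counts Googlebot requests per site section. The sketch below assumes access logs in the common "combined" format and identifies Googlebot by user-agent string only; user agents can be spoofed, so a production version would also verify the requesting IP. The section labels are a convention invented for this example.

```python
import re
from collections import Counter

# Matches the request and the final quoted field (user agent) of a combined log line.
LOG_PATTERN = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) HTTP/[\d.]+" \d{3} .*"(?P<agent>[^"]*)"$'
)


def section_of(path):
    """Label a path by its first segment, flagging URLs that carry parameters."""
    base, _, query = path.partition("?")
    first = base.strip("/").split("/")[0]
    label = "/" + first if first else "/"
    return label + " (with parameters)" if query else label


def googlebot_hits_by_section(log_lines):
    """Count requests whose user agent mentions Googlebot, grouped by section."""
    hits = Counter()
    for line in log_lines:
        match = LOG_PATTERN.search(line.strip())
        if match and "Googlebot" in match["agent"]:
            hits[section_of(match["path"])] += 1
    return hits
```

Sections with many hits but no SEO value, especially the "(with parameters)" buckets, are the first candidates for the blocking techniques that follow.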

Implementing Effective Crawler Directives

Once you’ve identified these URLs, implement a multi-layered approach to block them:

1. Robots.txt Disallow

The first line of defense is your robots.txt file. Add disallow directives for paths and patterns that should never be crawled:

User-agent: *

Disallow: /search/

Disallow: /user/

Disallow: /cart/

Disallow: /print/

Disallow: /*?sort=

Disallow: /*?filter=
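Before deploying rules like these, it helps to check which URLs they actually match. The sketch below is a simplified matcher for Disallow patterns with `*` wildcards and an optional trailing `$`; it ignores Allow rules and rule precedence, so treat it as a quick sanity check rather than a full robots.txt parser.

```python
import re

DISALLOW = ["/search/", "/user/", "/cart/", "/print/", "/*?sort=", "/*?filter="]


def robots_pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_disallowed(path, patterns=DISALLOW):
    """Return True if the path (including query string) matches any pattern."""
    return any(robots_pattern_to_regex(p).match(path) for p in patterns)


checks = {
    "/search/shoes": is_disallowed("/search/shoes"),
    "/products/shoes": is_disallowed("/products/shoes"),
    "/products?sort=price": is_disallowed("/products?sort=price"),
    "/products?color=red&sort=price": is_disallowed("/products?color=red&sort=price"),
}
```

Under this matcher, the last URL is not blocked: `/*?sort=` only matches when `sort` is the first parameter. If the parameter can appear later in the query string, a pattern such as `/*&sort=` would be needed as well.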

2. Apply Nofollow to Internal Links

Robots.txt alone isn’t enough, as search engines may still discover these URLs through links. For all links pointing to blocked paths:

- Add rel="nofollow" attributes to prevent link equity transfer

- Implement this consistently across all site templates and components

- Include JavaScript-generated links in this strategy

<a href="/search?query=example" rel="nofollow">Search Results</a>

3. Extended Nofollow Implementation

Don’t forget to apply nofollow in these often-overlooked areas:

- Transactional email links

- PDF documents that link back to your site

- Redirected URLs that aren’t canonicalized

- Social sharing buttons

- User-generated content links

4. URL Parameter Management

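Google retired the URL Parameters tool in Search Console in 2022, so parameter handling for Google now relies on robots.txt rules, canonical tags, and consistent internal linking. Where a search engine’s webmaster tools still offer parameter settings, use them to explain how specific parameters should be handled: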

- Identify parameters that create duplicate content

- Configure them to be ignored by crawlers

- Regularly audit parameter handling as your site evolves
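One way to find parameters that create duplicate content is to strip the suspects from every crawled URL and see which URLs collapse into the same address. In the sketch below, the `IGNORED_PARAMS` list is a hypothetical starting point, not a recommendation for any particular site.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse

# Hypothetical list of parameters that do not change page content.
IGNORED_PARAMS = {"sort", "filter", "sessionid", "utm_source", "utm_medium", "utm_campaign"}


def normalize_url(url, ignored=IGNORED_PARAMS):
    """Drop ignored query parameters and return the remaining URL."""
    parts = urlparse(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in ignored
    ]
    return urlunparse(parts._replace(query=urlencode(kept)))


def duplicate_groups(urls):
    """Group URLs that normalize to the same address; keep groups of 2 or more."""
    groups = {}
    for url in urls:
        groups.setdefault(normalize_url(url), []).append(url)
    return {canonical: variants for canonical, variants in groups.items() if len(variants) > 1}
```

Feeding crawled URLs from your log files into `duplicate_groups` shows how many variants each page has, which helps decide where canonical tags or robots.txt rules are needed.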

Monitoring Crawler Behavior

After implementing these blocking strategies:

- Continue monitoring log files to ensure search engines respect your directives

- Watch for new patterns of inefficient crawling as your site evolves

- Regularly update your blocking strategy as needed

By systematically eliminating crawler waste, you redirect search engine attention to your valuable content, increasing the likelihood and efficiency of proper indexing.

Step 8: Use 304 Responses to Help Crawlers Prioritize New Content

One often-overlooked aspect of improving indexing efficiency is optimizing how your server responds when search engines recrawl your pages. By implementing proper HTTP status codes, you can help crawlers allocate more resources to discovering and indexing new content.

Understanding the Crawl Cycle

When search engines visit your site, they typically spend the majority of their time recrawling existing pages to check for updates. This process follows these steps:

- The crawler requests a page it has previously visited

- Your server responds with the page content and an HTTP status code

- The crawler compares the content to its previously stored version

- If changes are detected, the page is reprocessed for indexing

By default, most servers return a 200 OK status code for every successful request, forcing crawlers to download and compare the entire page content—even when nothing has changed.

The Power of 304 Not Modified

The HTTP 304 (Not Modified) status code offers a more efficient alternative:

- When a crawler requests a page, it can include an “If-Modified-Since” header carrying the Last-Modified date it received on its previous visit (or an “If-None-Match” header carrying the previous ETag)

- If the page hasn’t changed since that date, your server can respond with a 304 status

- This tells the crawler “nothing has changed” without sending the page content

- The crawler saves bandwidth and processing time, which it can redirect to discovering new content

Implementing 304 Responses

To effectively implement 304 responses:

- Enable ETag and Last-Modified Headers: Configure your web server to include these HTTP headers with every response

- Proper Cache-Control Headers: Set appropriate cache lifetimes based on content update frequency

- Content-Based ETags: Configure ETags based on content hashes rather than inode numbers

- Conditional Logic: Ensure your server checks modification dates properly before sending responses
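For dynamically generated pages, the conditional logic has to live in your application. The sketch below shows the decision in isolation: it derives an ETag from a hash of the response body and returns 304 when the request's validators still match. It is a simplified illustration (for example, it does not handle weak ETags or the `*` wildcard), and the function names are hypothetical.

```python
import hashlib
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime


def make_etag(body: bytes) -> str:
    """Content-based ETag: a quoted, truncated SHA-256 of the response body."""
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def respond(body, last_modified, request_headers):
    """Return (status, headers, body) for a GET, honoring conditional headers.

    `last_modified` must be a timezone-aware UTC datetime.
    """
    etag = make_etag(body)
    headers = {
        "ETag": etag,
        "Last-Modified": format_datetime(last_modified, usegmt=True),
    }
    if_none_match = request_headers.get("If-None-Match")
    if_modified_since = request_headers.get("If-Modified-Since")
    not_modified = False
    if if_none_match is not None:
        # If-None-Match takes precedence over If-Modified-Since.
        not_modified = etag in [tag.strip() for tag in if_none_match.split(",")]
    elif if_modified_since is not None:
        try:
            since = parsedate_to_datetime(if_modified_since)
            not_modified = last_modified.replace(microsecond=0) <= since
        except (TypeError, ValueError):
            not_modified = False  # unparseable date: fall back to a full response
    if not_modified:
        return 304, headers, b""
    return 200, headers, body
```

Because the ETag comes from the content itself, it stays stable across servers and changes only when the page does.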

Here’s a sample Apache configuration for implementing these headers:

<IfModule mod_expires.c>

ExpiresActive On

ExpiresByType text/html "access plus 1 hour"

ExpiresByType image/jpg "access plus 1 year"

ExpiresByType image/jpeg "access plus 1 year"

ExpiresByType image/gif "access plus 1 year"

ExpiresByType image/png "access plus 1 year"

ExpiresByType text/css "access plus 1 month"

ExpiresByType application/pdf "access plus 1 month"

ExpiresByType text/javascript "access plus 1 month"

ExpiresByType application/javascript "access plus 1 month"

</IfModule>

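FileETag MTime Size

With these settings, Apache keeps sending its automatic Last-Modified header for static files and builds ETags from modification time and size rather than inode numbers, which lets it answer unchanged conditional requests with a 304 on its own. Dynamically generated pages need the equivalent logic in the application.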

Strategic 304 Implementation

Not all content should use the same 304 strategy. Implement a tiered approach:

- Frequently Updated Content: Set shorter cache times for news articles, product pages with changing inventory, etc.

- Static Content: Use longer cache times for content that rarely changes

- Critical Pages: Consider always serving 200 responses for your most important pages to ensure they’re regularly reprocessed

Measuring the Impact

To verify your 304 implementation is working:

- Check server logs to confirm search engines are receiving 304 responses

- Monitor the ratio of 200 vs. 304 responses over time

- Track whether crawling of new content increases

By reducing the resources search engines spend redownloading unchanged content, you effectively increase their capacity to discover and index new pages on your site.

Step 9: Manually Request Indexing for Hard-to-Index Pages

Despite implementing all the automated strategies outlined above, you may still encounter stubborn pages that resist indexing. For these critical cases, manual intervention can provide the final push needed to get your content into search engine indexes.

Google Search Console URL Inspection

Google Search Console offers a direct method to request indexing for specific URLs:

- Navigate to the URL Inspection tool

- Enter the full URL of the page you want to index

- Once Google retrieves the current information, click “Request Indexing”

- Google will typically process these requests with higher priority than regular crawling

Important Limitation: Google limits these manual submissions to approximately 10 URLs per day per property. Use these requests strategically for your most valuable content.

Maximizing Manual Submission Success

To improve the likelihood of successful indexing through manual requests:

- Pre-Optimization: Before submission, ensure the page is fully optimized:

  - Contains substantial, unique content

  - Has proper meta tags and schema markup

  - Loads quickly and renders properly

  - Links to and from other relevant pages on your site

- Strategic Timing: Submit requests during working hours in U.S. time zones (when Google’s systems may be more responsive)

- Verification: After submission, check the status again after 24-48 hours to confirm indexing

- Progressive Approach: If a page isn’t indexed after the first request, make meaningful improvements before trying again

Bing Webmaster Tools Submissions

For Bing, the manual submission process works differently:

- Navigate to the “URL submission” section in Bing Webmaster Tools

- Enter the URL you want indexed

- Click “Submit”

However, testing indicates that the IndexNow API covered in Step 5 provides similar results with less manual effort for Bing indexing requests.

Tracking Manual Submission Results

Keep a log of all manual submissions including:

- URL submitted

- Date of submission

- Status before submission

- Status after submission (check at 24h, 48h, and 7 days)

- Any changes made between submissions

This data helps identify patterns in what types of content or structural elements might be hindering automatic indexing, informing broader improvements to your site.

When Manual Submissions Fail

If repeated manual submissions don’t result in indexing, this signals deeper issues that require investigation:

- Content quality or uniqueness problems

- Technical issues preventing proper rendering

- Potential duplicate content with other pages

- Site-wide quality or authority issues

In these cases, focus on resolving the underlying problems rather than continuing to submit the same URLs.

Common Indexing Myths and Misconceptions

As with many aspects of SEO, indexing is surrounded by myths and misconceptions that can lead site owners astray. Let’s address some of the most persistent ones:

Myth #1: All Pages Should Be Indexed

Reality: Selective indexing is actually beneficial. Search engines prefer quality over quantity, and having too many low-value pages in the index can dilute your site’s perceived quality. Focus on getting your best, most valuable content indexed rather than aiming for 100% coverage.

Myth #2: Indexing Automatically Leads to Ranking

Reality: Being indexed is just the first step. Once indexed, a page still needs to compete on all ranking factors to appear in search results. Many indexed pages never rank for any meaningful queries simply because they don’t satisfy ranking criteria.

Myth #3: Submitting a Sitemap Guarantees Indexing

Reality: Sitemaps are suggestions, not commands. They help search engines discover your URLs, but inclusion in a sitemap does not guarantee a page will be indexed. Google’s own documentation describes a sitemap as a hint and notes that submitting one does not guarantee that every listed URL will be crawled or indexed.

Myth #4: Noindex Tags Prevent Discovery

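Reality: A noindex tag only prevents inclusion in the index; it doesn’t prevent crawling. Search engines may still discover and crawl these pages, potentially using crawl budget, and they must crawl a page to see its noindex tag at all. For that reason, avoid pairing noindex with a robots.txt block on the same URL: a blocked page can’t be fetched, so the tag is never read, and the URL can still be indexed without its content if other pages link to it. For pages that search engines truly should never see, put them behind authentication instead.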
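
One caveat worth checking in practice: a crawler can only honor a noindex tag on a page it is allowed to fetch, so a robots.txt block on the same URL hides the tag. The sketch below flags that situation using only the standard library. It is a simplified check under stated assumptions: it looks only at `<meta name="robots">` tags (not the `X-Robots-Tag` HTTP header), and the function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if tag == "meta" and (attr_map.get("name") or "").lower() == "robots":
            content = attr_map.get("content") or ""
            self.directives.update(p.strip().lower() for p in content.split(","))


def has_noindex(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


def noindex_is_visible(url, html, robots_txt, user_agent="Googlebot"):
    """True only if the page carries noindex AND robots.txt lets the crawler fetch it."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return has_noindex(html) and rules.can_fetch(user_agent, url)


# Illustrative inputs; in practice these would come from your own site.
robots_txt = "User-agent: *\nDisallow: /private/\n"
page_html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
```

With these inputs, a URL under `/private/` returns `False` (the tag exists but crawlers are blocked from reading it), while the same HTML served from an unblocked path returns `True`.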

Myth #5: New Content Is Always Indexed Quickly

Reality: Indexing speed varies dramatically based on site authority, update frequency, and content quality. Major news sites might see new content indexed within minutes, while smaller sites may wait days or weeks for new pages to be indexed.

Myth #6: More Frequent Crawling Equals Better Rankings

Reality: While frequent crawling can help get content updates indexed faster, it has no direct impact on rankings. Many rarely-updated pages rank extremely well despite being crawled infrequently.

Myth #7: All Indexed Pages Appear in Site: Searches

Reality: The site: search operator in Google doesn’t show all indexed pages from a domain. It provides an estimate and sample, not a comprehensive list. Google Search Console provides more accurate indexing data.

Myth #8: JavaScript Content Can’t Be Indexed

Reality: Modern search engines can index JavaScript content, but it’s more challenging and resource-intensive. Server-side rendering or hybrid rendering approaches are still recommended for critical content to ensure more reliable indexing.
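
A quick way to see whether critical content depends on JavaScript is to check the raw HTML response, before any script runs. The following sketch does that for a list of phrases you consider essential. It assumes you have already fetched the unrendered HTML as a string; the class and function names are hypothetical, and a phrase being absent here only means it is not in the initial response, not that it cannot be indexed.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collect text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def missing_from_raw_html(raw_html, critical_phrases):
    """Return the phrases that do not appear in the unrendered HTML text."""
    parser = VisibleTextParser()
    parser.feed(raw_html)
    parser.close()
    # Normalize whitespace and case before matching.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return [p for p in critical_phrases if p.lower() not in text]


# Illustrative page: the product name is only injected by a script.
raw_html = (
    '<html><body><div id="app"></div>'
    '<script>render("Blue Widget");</script>'
    "<h1>Acme Store</h1></body></html>"
)
```

Any phrase returned by `missing_from_raw_html` is a candidate for server-side or hybrid rendering, since it reaches the page only after scripts execute.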

Measuring Indexing Success: Key Metrics to Track

Implementing the strategies outlined above is just the beginning. To ensure ongoing success, track these key metrics:

- Index Coverage: Monitor the total number of indexed pages through Google Search Console. Compare this to your total number of valuable pages to assess coverage.

- Indexing Rate by Content Type: Measure how quickly different types of content get indexed. For instance, track how long it takes for new product pages or articles to appear in search results.

- Crawl Efficiency: Analyze server logs to determine what percentage of crawl requests result in meaningful content discovery versus wasted resources on non-SEO pages.

- Index Turnover: Track how often pages fall out of the index and need to be reindexed. High turnover rates may indicate underlying quality or technical issues.

- Traffic from Newly Indexed Pages: Measure the traffic contribution from recently indexed content to evaluate its value. This helps justify continued investment in indexing optimization.

- API Submission Success Rates: If you’re using IndexNow or the Google Indexing API, track the percentage of submitted URLs that successfully get indexed within your target timeframe.

- Manual Submission Outcomes: Log and analyze the results of manual indexing requests through Google Search Console and Bing Webmaster Tools to identify patterns in what types of content succeed or fail.

- Content Freshness Impact: Evaluate how updates to existing content affect its indexing status and search performance. This helps optimize your content maintenance strategy.

By regularly monitoring these metrics, you can refine your indexing strategy, identify emerging issues early, and demonstrate the business impact of your SEO efforts. Remember, indexing is not a one-time fix but an ongoing process that requires continuous attention and optimization.
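
Several of these metrics reduce to simple ratios once you have the underlying URL lists and logs. The sketch below shows one possible way to compute index coverage, index turnover, and crawl efficiency. The function names, the definition of turnover (share of previously indexed URLs that dropped out), and the log format (a common access-log layout with the request line in quotes) are assumptions for illustration. Matching the bot by user-agent substring is also a simplification, since user agents can be spoofed.

```python
import re


def index_coverage(valuable_urls, indexed_urls):
    """Share of valuable URLs that are currently indexed (0.0 to 1.0)."""
    valuable = set(valuable_urls)
    if not valuable:
        return 0.0
    return len(valuable & set(indexed_urls)) / len(valuable)


def index_turnover(indexed_before, indexed_now):
    """Share of previously indexed URLs that have dropped out of the index."""
    before = set(indexed_before)
    if not before:
        return 0.0
    return len(before - set(indexed_now)) / len(before)


def crawl_efficiency(log_lines, seo_path_pattern, bot_token="Googlebot"):
    """Share of bot requests that hit paths matching seo_path_pattern."""
    pattern = re.compile(seo_path_pattern)
    bot_hits = useful_hits = 0
    for line in log_lines:
        if bot_token not in line:
            continue  # not a request from the bot we care about
        match = re.search(r'"(?:GET|HEAD) (\S+)', line)
        if not match:
            continue  # line has no recognizable request
        bot_hits += 1
        if pattern.search(match.group(1)):
            useful_hits += 1
    return useful_hits / bot_hits if bot_hits else 0.0


# Illustrative data only.
sample_log = [
    '66.249.66.1 - - [01/Jan/2024:00:00:01 +0000] "GET /products/blue-widget HTTP/1.1" 200 512 "-" "Googlebot/2.1"',
    '66.249.66.1 - - [01/Jan/2024:00:00:02 +0000] "GET /cart?session=1 HTTP/1.1" 200 128 "-" "Googlebot/2.1"',
    '203.0.113.7 - - [01/Jan/2024:00:00:03 +0000] "GET /products/red-widget HTTP/1.1" 200 512 "-" "Mozilla/5.0"',
]
```

Tracking these ratios over time, rather than as one-off numbers, is what makes them useful: a falling coverage figure or a rising turnover figure is an early signal to investigate.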

Future-Proofing Your Indexing Strategy

As search engines continue to evolve, maintaining an effective indexing strategy requires staying ahead of emerging trends and technologies. Here are key considerations for future-proofing your approach:

- AI and Machine Learning Integration: As search engines increasingly rely on AI for indexing decisions, ensure your content is structured in ways that machine learning algorithms can easily understand. This includes implementing proper schema markup and maintaining clear content hierarchies.

- Mobile-First Indexing Optimization: With Google’s shift to mobile-first indexing, regularly audit your mobile site’s performance, content parity, and technical implementation. Use tools like Lighthouse or the URL Inspection tool in Google Search Console to identify and fix issues (Google retired its standalone Mobile-Friendly Test in late 2023).

- Voice Search Readiness: Optimize your content for voice search queries by focusing on natural language patterns and question-based content structures. This helps ensure your content remains discoverable as voice search adoption grows.

- Structured Data Expansion: Continuously expand and refine your use of structured data to help search engines understand your content’s context and purpose. Stay updated on new schema types and best practices.

- Core Web Vitals Performance: Maintain strong Core Web Vitals scores. Page experience is a ranking consideration rather than an indexing requirement, but slow server responses can limit how much of your site gets crawled. Regularly monitor and optimize loading performance, interactivity, and visual stability.

- Content Delivery Network (CDN) Optimization: Leverage modern CDNs to ensure fast content delivery worldwide, which can positively impact crawl efficiency and indexing speed.

- Security and HTTPS Maintenance: Keep your site’s security protocols up-to-date and maintain proper HTTPS implementation. Security issues can prevent proper indexing and damage user trust.

- International and Multilingual Support: If you serve global markets, implement hreflang tags correctly and maintain consistent international site structures to ensure proper indexing across regions.

- Emerging Technologies Adoption: Stay informed about new indexing protocols and technologies. For example, explore how Programmatic Advertising and other advanced targeting methods can complement your organic indexing efforts.

- Continuous Education and Adaptation: The SEO landscape changes rapidly. Invest in ongoing education through resources like [SEO Thailand](https://relevant